The missing factor is intent. Make a random image, that’s that. But if it’s proven that the accused made efforts to recreate a victim’s likeness, that shows intent. Any explicit work by the accused with the likeness would be used to prove the charges.

Just a guy jumping from a hot mess into more prosperous waters.

Reading these comments has shown me that most users don’t realize that not all working artists are using 1099s and filing as individuals. Once you have stable income and assets (e.g. equipment), there are tax and legal benefits to incorporating your business. Removing copyright protections for large corporations will impact successful small artists who just wanted a few tax breaks.

A lot of nuance will be missed without some gradation between “I <3 China” and “Down with Pooh!” For example, if we added “Slightly favorable”, “Neutral”, and “Slightly unfavorable” we would begin to see just how favorable younger generations are. Rather than presume there is a deep divide on trade policy, if two bars are almost equal, we may see they are largely neutral. Similarly, we could see just how favorable their views of TikTok really are by looking at the spread between “Neutral” and “I <3 China!”

I’m convinced the AI had the hand on a loop. It’s like watching someone’s first presentation in speech and debate class. It will look better eventually, but I doubt it’ll figure out the subtle emphasis great body language adds to speech.

The short version is that there are two images and a sidecar/XMP file sandwiched into one file. First is the standard dynamic range image, what you’d expect to see from a JPEG. Second is the gain map, an image whose contents include details outside of SDR. The sidecar/XMP file has instructions on how to blend the two images together to create a consistent HDR image across displays.

So it’s HDR-ish enough for the average person. I like this solution, especially after seeing the hellscape that is DSLR raw format support.
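
For anyone curious, here is a rough Python sketch of the blending idea for a single pixel value. It is a simplification under my own assumptions, not the actual spec: real gain map formats carry more metadata (minimum boost, offsets, gamma), and the names `apply_gain_map`, `display_weight`, and `max_boost_stops` are made up for illustration.

```python
def display_weight(display_headroom_stops, max_boost_stops):
    """How much of the gain map to apply, from 0.0 (SDR display) to 1.0.

    Both arguments are in stops (log2 units). These are hypothetical
    parameters, not field names from any real metadata format.
    """
    if max_boost_stops <= 0:
        return 0.0
    ratio = display_headroom_stops / max_boost_stops
    return max(0.0, min(1.0, ratio))


def apply_gain_map(sdr_linear, gain, max_boost_stops, weight):
    """Brighten one linear-light SDR value using its gain-map sample.

    sdr_linear: linear-light SDR value
    gain: normalized gain-map sample, 0.0 to 1.0
    max_boost_stops: largest boost the file allows, in stops (assumed)
    weight: result of display_weight() for the current screen
    """
    # The boost is applied in log2 space, so scaling it by the weight
    # degrades smoothly on displays with less headroom.
    boost_stops = gain * max_boost_stops * weight
    return sdr_linear * (2.0 ** boost_stops)


# Same pixel on an SDR screen and on a screen with full headroom.
sdr_result = apply_gain_map(0.5, 1.0, 2.0, display_weight(0.0, 2.0))
hdr_result = apply_gain_map(0.5, 1.0, 2.0, display_weight(2.0, 2.0))
```

With a weight of zero the SDR value passes through untouched, which is why the same file still looks right on an ordinary screen.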

It’s likely the crew was using Fresnel lights, which are bright and very hot. You can easily burn yourself or set fire to delicate objects after prolonged use. It’s not hard to imagine a crew member moving the lights and leaving them on and highly focused to imitate a distant light source, like a magnifying glass under the sun.

Especially when they’re non food/textile producing ones that are literally used to just get drunk.

Just for clarity, agave is edible and can be used as a sweetener, its fibers can be used in textiles, it can be made into soap, and it has some medicinal uses. Once the market saturates with spirits, its other uses will likely be marketed to fully utilize crop yields.

I feel that this can be addressed at the application step. Any applicant whose date of birth shows they are under 18 cannot apply without an in-person interview. This protects minors from taking on debt without fully understanding the implications, and puts responsibility on the lender for providing credit to a minor. If credit is provided and the account defaults, the debt should be the lender’s problem for taking such a huge risk.

Alternatively, the same premise with the exception that an adult is required as a cosigner. If the account defaults the burden is shifted to the adult as they have the cognizance to understand and take responsibility.

I wouldn’t outright ban giving accounts to minors. My parents opened a savings account in my name and kept it in good standing. This gave me a big credit boost that my peers never had. But I realize I am an exception, and the problem others face is very real.

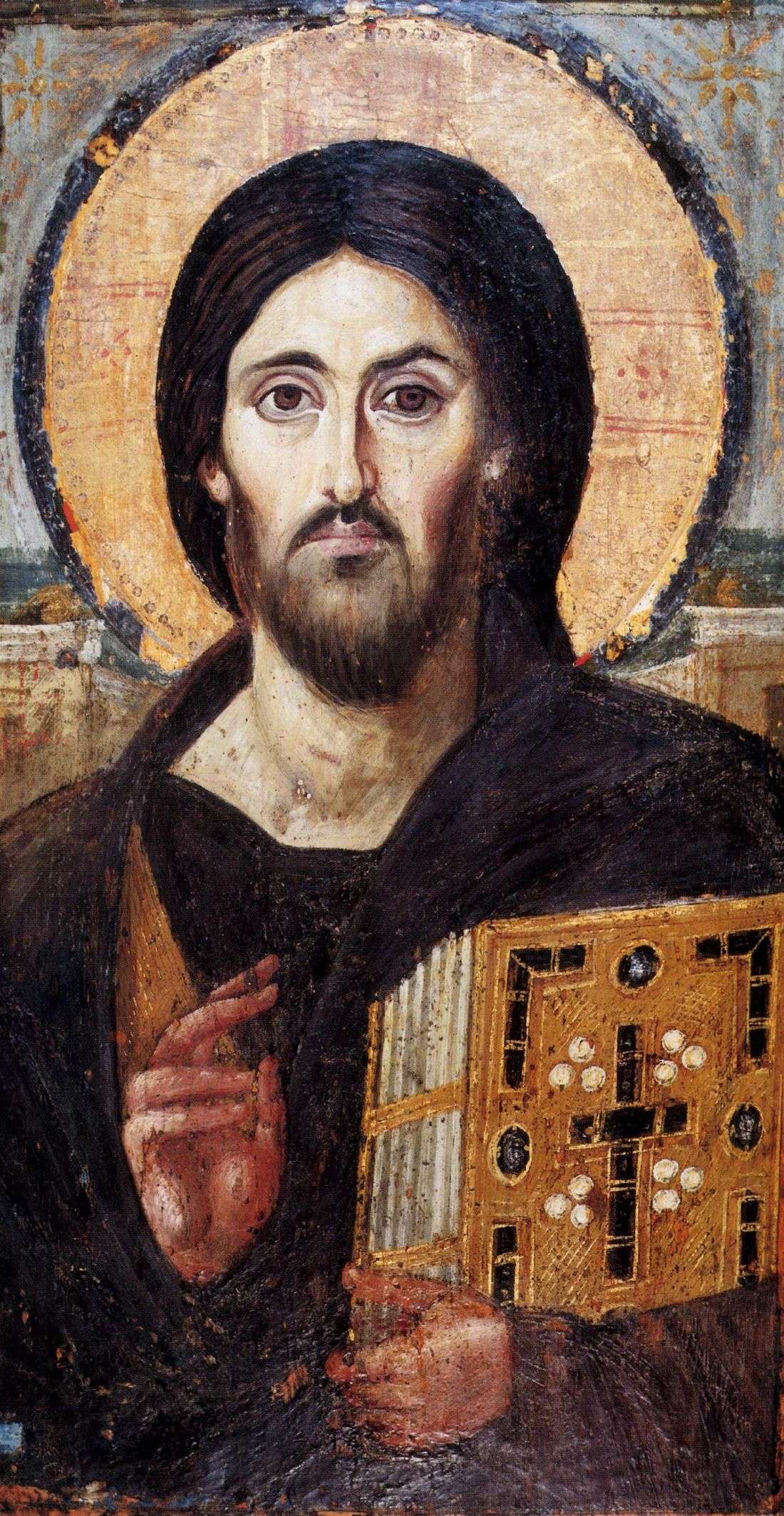

Just a side piece to the discussion: many of the surviving paintings of Christ are biased by the artist towards appearing more like the people of the area, in addition to the symbolism of the era. Christ Pantocrator of St. Catherine’s Monastery is one of the oldest depictions of Christ and deviates from the modern European style.

They may be thinking of the lobbying done by AIPAC to influence the relationship between the U.S. and Israel.

I don’t blame you. Even in a professional setting, tagging is mind-numbing and tedious. The only difference is that without tagging you might miss an image that can be licensed and the business opportunity that needed it.

Given your experience, what do you believe would be a good starting point towards caring for these individuals? What issues and solutions do you see that aren’t addressed? I understand I’m an outsider looking in on this issue, avoiding the mentally ill homeless like many others. But if my vote can go towards better solutions, I’d like to learn about them.

If we apply the current ruling of the US Copyright Office, then the prompt writer cannot copyright the work if AI is the majority of the final product. AI itself is software and ineligible for copyright; we can debate sentience when we get there. The researchers are also out as they simply produce the tool–unless you’re keen on giving companies like Canon and Adobe spontaneous ownership of the media their equipment and software has created.

As for the artists the AI output is based upon, we already have legal precedent for this situation. Sampling has been a common aspect of the music industry for decades now. Whenever a musician samples work from others, they are required to get a license and pay royalties at an agreed percentage/amount based on performance metrics. Photographers and filmmakers are also required to have releases (rights to a person’s image, the likeness of a building) and also pay royalties. Actors are also entitled to royalties by licensing out their likeness. This has been the framework that allowed artists to continue benefiting from their contributions as companies min-maxed markets.

Hence Shutterstock’s terms for copyright on AI images are both building upon legal precedent and could be the first step in getting AI work copyright protection: obtaining the rights to legally use the dataset. The second would be determining how to pay out royalties based on how the AI called and used images from the dataset. The system isn’t broken by any means; it’s the public’s misunderstanding of the system that makes the situation confusing.

There’s one that comes to mind: registration of works with the Copyright Office. When submitting a body of work you need to ensure that you’ve got everything in order. This includes rights for models/actors, locations, and other media you pull from. Having AI mixed in may invalidate the whole submission. Since it’s cheaper to submit related work in bulk, a fair amount of Loki materials could be in limbo until the application is amended or resubmitted.

The advertising angle is likely what sank their case. Proving the food does not meet a technical specification, like not having a quarter pound of beef in a fully cooked patty, is easier. But advertising has always been hyperbole.

This is your daily reminder to engage and boost Twitter alternatives such as Mastodon. It’s not enough to ignore Twitter. We must build communities to draw in users, show them social media can exist without Elon or Zuck. Only when good alternatives exist, with content and people sought after, do users feel safe to abandon old platforms.

Don’t forget your manpower is limited. No one is a lemmy.world employee and people need sleep. That leaves 8 hours in a day for literally everything else a person needs/wants to do. Having one service that just works is a load off an all-volunteer team’s back.

They do have intelligence, but that intelligence is deliberately underfunded to prevent this very situation. It’s impossible to navigate the mountains of paperwork and legal loopholes the ultra-wealthy use with so few hands. That’s why poorer filers get audited more often: less leg work, easier wins, at the expense of real revenue and justice against tax evaders.

Said it before, I’ll say it again:

The software has shown it is still not ready for production by interfering with emergency services, public transit, and normal traffic. These companies need to send these vehicles out with a driver until the software is ironed out. We suspend human drivers for such actions. We must extend the same expectations and consequences to driverless vehicles.

If a human driver blocked an ambulance and caused a patient death, they’d be imprisoned for wrongful death. If Cruise wants to roll out its software in this state, let them shoulder the legal and financial consequences.

As someone who has spent their life translating for family this isn’t surprising. Nor is it any easier when they bring me poorly translated documents and hope I decipher machine diarrhea. The tech is still years behind being real world ready, especially with anything above 6th grade grammar and nuanced word choice that depends on context and sometimes dialect. But free is free so 🤷