“You see, killbots have a preset kill limit. Knowing their weakness, I sent wave after wave of my own men at them until they reached their limit and shut down.”

Currently all iOS browsers are Safari with custom interfaces layered over the top.

The volume of cash that Microsoft throws at retailers (custom builders / big box) is astronomical. I worked for a relatively small retailer with some international buying power. The EOFY “MDF” from Microsoft was an absurd figure.

Our builders would belt out 3 - 6 machines per day, depending on the complexity of the custom build; the pre-built machines were in the 6+ per day range.

Considering the vast majority of those machines were running Windows (some sold without an OS), from a quick estimate after too many beers we were out of pocket 10% at most of the bulk buy price for licence keys after our “market development funds” came through.

It’s fucking crook.

I’ve not tested the method linked but yeah it would seem like it’s possible via this method.

My lone VM doesn’t need a connection to those drives so I’ve not had a reason to.

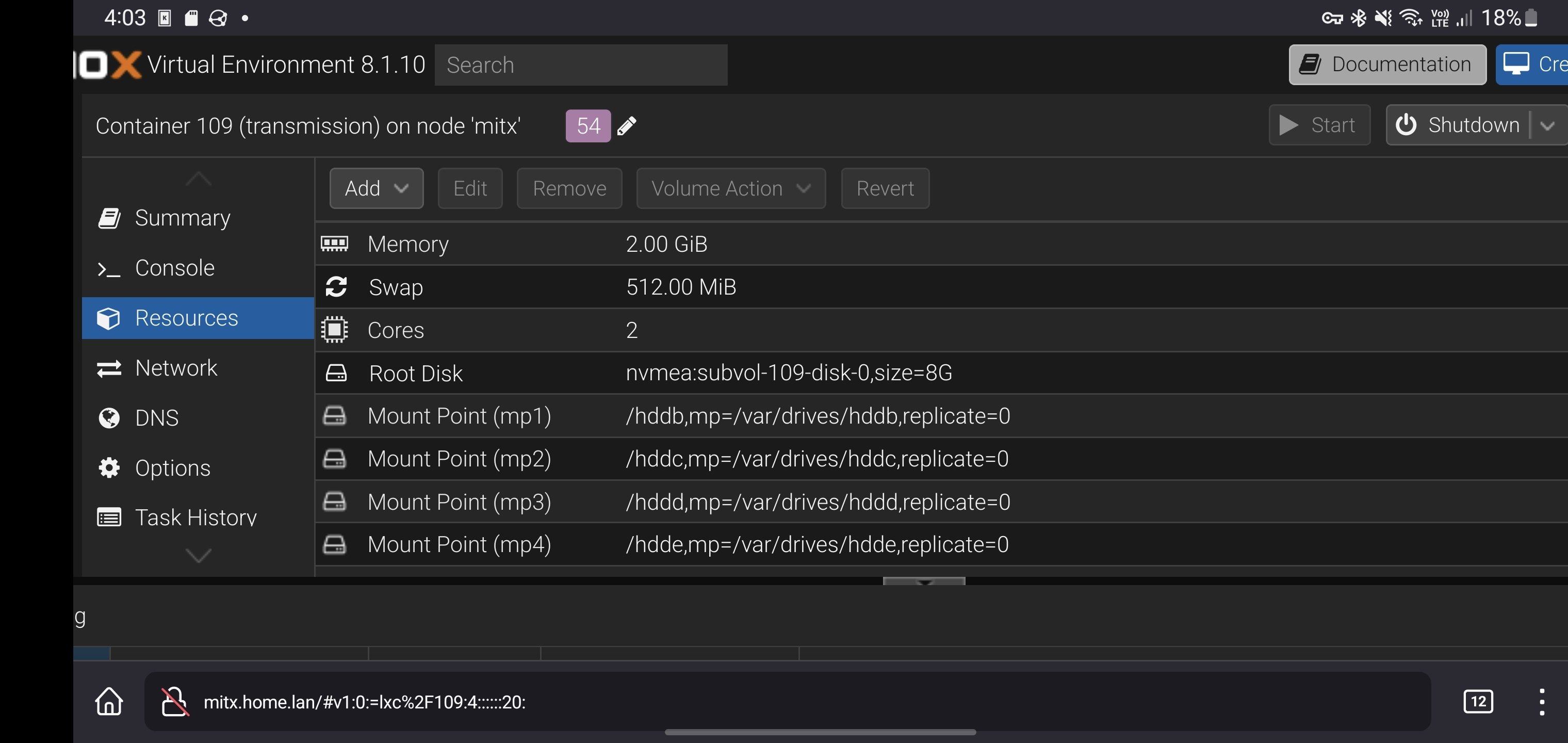

You could probably run OMV in an LXC and skip the overheads of a VM entirely. LXC are containers, so you can just edit the config files for the containers on the Proxmox host and pass drives right through.

Your containers will need to be privileged (or you’ll have to sort out UID/GID mapping on an unprivileged one). You can also clone a container and make the clone privileged if you have something set up already as unprivileged!
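As a rough sketch of that pass-through, a bind mount is a single line in the container’s config file on the Proxmox host. The container ID (`101`) and the `/mnt/storage` paths below are made up for illustration, so swap in your own. Only the `mp0:` line goes in the file; the `#` lines are just annotation here.

```
# File on the host: /etc/pve/lxc/101.conf  (101 is a hypothetical container ID)
# Format: mpN: <path on the Proxmox host>,mp=<path inside the container>
mp0: /mnt/storage,mp=/mnt/storage
```

The same line can be written from the host shell with `pct set 101 -mp0 /mnt/storage,mp=/mnt/storage`. If the mount doesn’t show up inside the container straight away, restarting the container should pick it up.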

Yeah there is a workaround for using bind-mounts in Proxmox VMs: https://gist.github.com/Drallas/7e4a6f6f36610eeb0bbb5d011c8ca0be

If your drives are mounted on the Proxmox host (and not in a VM), try an LXC for the services you are running. If you require a VM, then the above workaround is what I’d recommend, after backing up your data.

I’ve got my drives mounted in a container as shown here:

Basicboi config, but it’s quick and gets the job done.

I’d originally gone down the same route as you had with VMs and shares, but it was all too much after a while.

I’m almost rid of all my VMs; Home Assistant is currently the last package I’ve yet to migrate. I’ve migrated Frigate to a Docker container under NixOS and my Tailscale exit node to NixOS too, while the vast majority of other packages are already in LXC.

Ahh the shouting from the rooftops wasn’t aimed at you, but the general group of people in similar threads. Lots of people shill tailscale as it’s a great service for nothing but there needs to be a level of caution with it too.

I’m quite new to the self hosting game myself, but services like tailscale which have so much insight / reach into our networks are something that in the end, should be self hosted.

If you’re using SMB locally between VMs maybe try Proxmox. https://clan.lol/ is something I’m looking into to replace Proxmox down the line. I currently share bind-mounts between multiple LXC from the host Proxmox OS; configuration is pretty easy, and there are lots of tutorials online for getting started.

I still use it, the service is very handy (and passes the wife test for ease of use)

Probably some tinfoil hat level of paranoia, but it’s one of those situations where you aren’t in control of a major component of your network.

Tailscale is great, but it’s not something that should be shouted from the rooftops.

I use tailscale with nginx / pihole for my home services BUT there will be a point where the “free” tier of their service will be gutted / monetized and your once so free, private service won’t be so free.

Tailscale are SaaS (software as a service). Once their venture capital funds look like they’re running dry, the funds will be coming from your data, from limiting the service with a push to subscription models, or a combination.

Nebula is one such alternative, headscale is another. WireGuard (which Tailscale is based on) is another again.

I just installed xpipe and found I was habitually double-clicking. Once I had a good 3+ terminal sessions running, I figured I’d best find out why.

Love my old Garmin vivoactive 2? Screen looks like shit but it’s readable in any setting. It’s pretty basic, use it for cycling as it talks to my chest-strap heart rate monitor.

I’ve got a Pixel Watch too, it’s a typical 1st gen product. Half baked but gets the job done, battery is an all-day for me (no SIM model). It’s got some scratches and blemishes all over the face, we’ll see how long it kicks around for.

Discord, Spotify and other Electron applications will work fine in a browser. Rather than installing packages that are causing you issues just run them in Firefox.

It’s not a hardware issue but a combination of software issues.

Discord is trash, had issues with a KBDFans product, something as simple as a search of a forum would have given me the solution. I had to talk to a human to get the required information. They sent me a link to a firmware to download and all was good.

If I was able to search a forum it would have been a 2 minute job, but I wasted someone else’s time, on the other side of the globe.

Gallagher were great at that: a rubbish solution for “teaching” staff about phishing, which would infuriate all staff caught in the net. The emails would come from internal addresses too and, if one person’s email / credentials are compromised, they’ve got bigger fish to fry.

I mean it’s not the worst. Is it still https? Or are they serving plain ol http? My internal services (at home) are mostly https, but the certs are self signed so browsers will flag them as “insecure”.

Firefox is fine on mobile in my eyes.

At least the Android version, even on my 5 year old Exynos phone it does what I need / want from a browser. Allows (some) extensions, lets me zoom wherever I want to on any page, has a reader mode and is snappy enough on old hardware.

Chrome tries to be / do far too much for me, just fuck off and let me browse the web. I do like the dynamic colours that Chrome on mobile uses on different webpages, is hot.

However Chrome gives me dirty Microsoft vibes, and it’s pretty hard to shake that stank.

If you’re on iOS, welcome to the walled garden. Hope you live in the EU.

I’ve got 3 cameras running on a vlan, with no access to the internet.

Frigate / Home Assistant + Tailscale (want to move away from this service) let me see my cameras remotely, receive notifications from events and even look at events / stills on my watch.

I have some cheap 5MP Reolink cameras, not the best for Frigate but they get the job done.

I’ve started using Geddit, a 3rd party app that doesn’t use the Reddit API. And it’s still better than the app they develop in-house.

I rarely visit Reddit, but when searching for something niche there always seems to be a few threads over there sadly.

Buy into our religion and follow our rules now, so you can go to the happy cloud place later.

They better stay away from .mom domains