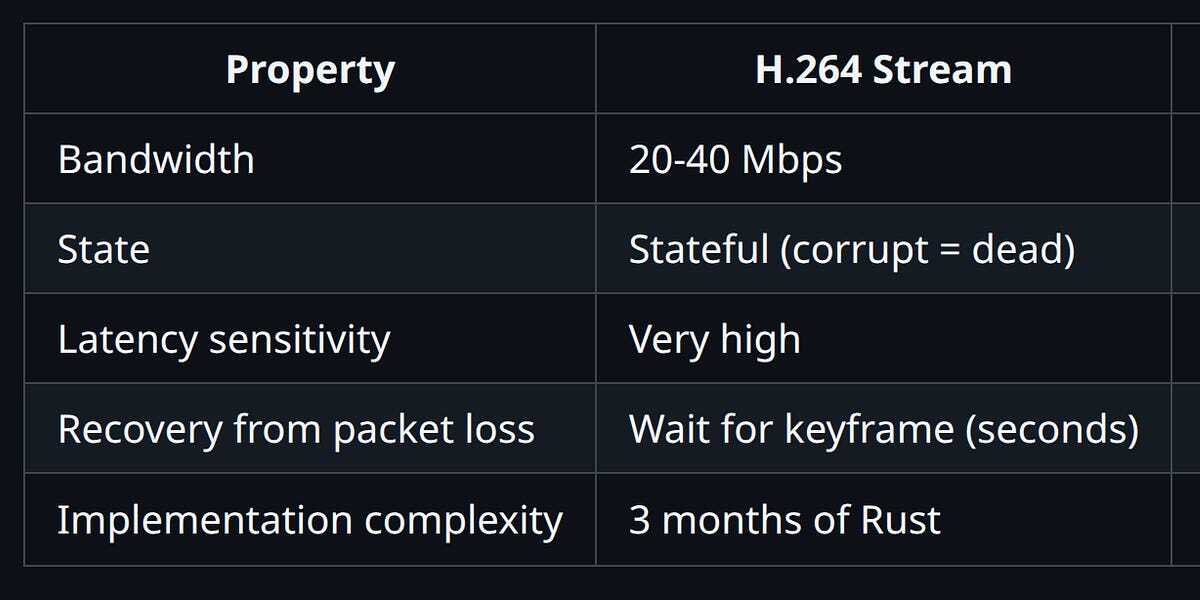

hardware-accelerated, WebCodecs-powered, 60fps H.264 streaming pipeline over WebSockets and replaced it with grim | curl when the WiFi got a bit sketchy.

I think they took https://justuse.org/curl/ a bit too seriously.

We’re building Helix, an AI platform where autonomous coding agents work in cloud sandboxes. Users need to watch their AI assistants work. Think “screen share, but the thing being shared is a robot writing code.”

Oh, they are all about useless tech. Why would you need to watch an agent code at 1080P 60fps using 40Mbps?

Thanks, I was confused about why the helix editor might need screen sharing. Haha.

I was thinking the mattress company. Totally different vibes if they are needing to send video…

I’ve seen some very excited sponsor spots for them on YouTube. CDNs often make ads load faster than videos, so who knows what kind of innovations they could be financing. All of them perfectly privacy preserving, of course…

Yup, crazy. I record all my coding screencasts at 15 fps and it looks fine while the video file is tiny.

This sounded unhinged so I just had to check the article to confirm…

Yep, another AI startup. The fact they chose Moonlight as their streaming protocol is proof they vibe coded the entire idea lmao. Moonlight/Sunshine are reverse-engineered implementations of Nvidia’s proprietary GameStream product, designed for low-latency streaming of game video/input on a typical home network. No competent engineer would choose that for this kind of application.

Laundering VC money for this. 😂

You are making it so people need to live watch AIs coding? This is insanity. This bubble is gonna hurt.

Uh, I’m pretty damn sure I have seen an office with hundreds of people, all connected remotely to workstations, on an enterprise network, without any of the problems they are talking about. I’ve worked remotely from coffee shop WiFi without any lag or issues. What the hell are they going on about? Have they never heard of VNC or RDP?

But our WebSocket streaming layer sits on top of the Moonlight protocol

Oh. I mean, I’m sitting on my own WiFi, one wall between me with a laptop (it is 10 years old, though) and my computer running a Sunshine/Moonlight stream, and I run into issues pretty often even on a 30 FPS stream. It’s made for super low-latency game streaming, so that’s expected. It’s an extremely wrong tool for the job.

We’re building Helix, an AI platform where autonomous coding agents…

Oh. So that’s why.

Lol.

WebSocket seems like a completely wrong abstraction for streaming video…

But our WebSocket streaming layer sits on top of the Moonlight protocol, which is reverse-engineered from NVIDIA GameStream.

Mf? The GameStream protocol is designed to be ultra low latency because it’s made for Game Streaming, you do not need ultra low latency streaming to watch your agents typing, WTF?

They’re gonna be “working” on a desktop, why the hell didn’t you look into VNC instead? RDP??

You know, protocols with built-in compression and other techniques to reduce their bandwidth usage? Hello?? The fuck are you doing???

That makes too much sense and you can’t shoehorn AI for that sweet sweet VC money.


I don’t understand why they bother with the “modern” method if the fallback works so well and is much simpler and cheaper.

JPEG method tops out at 5-10fps.

Modern method is better if network can keep up.

Don’t need high fps to watch an ai type.

Have you ever told an engineer not to build something overdesigned and fun to do?

From what I read, the modern solution has smooth 60 fps, compared to 2-10 FPS with the JPEG method. Granted, that probably also factors in low network speeds, but I’d imagine you may hit a framerate cap lower than 60 when just spamming JPEGs.
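A back-of-the-envelope sketch of that cap, in Python. The 150 KB per JPEG is a made-up figure for a 1080p desktop screenshot, not a number from the article, and protocol overhead is ignored:

```python
def max_fps(bandwidth_mbps: float, frame_kilobytes: float) -> float:
    """Upper bound on full frames per second a link can carry (no overhead)."""
    bits_per_frame = frame_kilobytes * 1000 * 8
    return bandwidth_mbps * 1_000_000 / bits_per_frame


# Hypothetical frame size: 150 KB per JPEG screenshot (assumption).
for mbps in (2, 8, 40):
    print(f"{mbps} Mbps -> at most {max_fps(mbps, 150):.1f} fps")
# 2 Mbps -> at most 1.7 fps
# 8 Mbps -> at most 6.7 fps
# 40 Mbps -> at most 33.3 fps
```

Under those assumptions, even the full 40 Mbps wouldn’t get full-frame JPEGs to 60 fps, and a sketchy link lands in single digits.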

you don’t need 60 fps to read text? All you need is to stream the text directly?

When the unga bunga solution works better than the modern one

It’s what the LLM suggested.

They didn’t explicitly say but it sounds like the JPEG solution can’t put out a substantial FPS. If you start to do fancier stuff like sending partial screenshots or deltas only then you get the same issues as H264 (you miss a keyframe and things start to degrade). Also if you try and put out 30 JPEGs per second you could start to get TCP queuing (i.e. can’t see screenshot 31 until screenshot 30 is complete). UDP might have made this into a full replacement but as they said sometimes it’s blocked.

The modern method is more efficient if your network is reliable.

Modern: I-frame (screenshot), then a bunch of P-frames (send only what changed). If a frame gets lost, the following frames aren’t really usable, frozen until next I-frame.

Old: Send only full screenshots. Each frame has all the data, so losing one doesn’t matter.
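A toy Python model of that difference. It assumes every P-frame depends only on the frame right before it, which is a simplification of how real H.264 references work; `usable_frames` is a hypothetical helper, not anything from the article:

```python
def usable_frames(frame_types: str, lost: set[int]) -> list[int]:
    """Return indices of frames that can still be displayed.

    frame_types: one character per frame, 'I' (full picture) or 'P' (delta).
    lost: indices of frames dropped by the network.
    """
    usable = []
    chain_ok = False  # True while we hold a valid reference picture
    for i, kind in enumerate(frame_types):
        if i in lost:
            chain_ok = False  # later P-frames have nothing valid to build on
            continue
        if kind == "I":
            chain_ok = True  # a full picture resets the chain
        if chain_ok:
            usable.append(i)
    return usable


print(usable_frames("IPPPIPPP", {1}))  # [0, 4, 5, 6, 7]
print(usable_frames("IIIIIIII", {1}))  # [0, 2, 3, 4, 5, 6, 7]
```

Losing frame 1 in the I/P stream freezes the picture until the next I-frame at index 4, while the all-I stream only misses the one lost frame.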

I’m sorry, it seems to me that your first statement and supporting paragraph are taking the opposite position.

Oops. I meant the modern method is better if network is reliable. Not unreliable

Gotcha. That probably is true.

“we suck at coding so we did a funny but shitty workaround look at us we are amazing woohoo buy our vaporware”

UDP — Blocked. Deprioritized. Dropped. “Security risk.”

Is this actually a thing? I’ve never seen any corporate network that blocks UDP. HTTP/3 even relies on it.

Yes. QUIC and other protocols are too new and don’t have a ton of support in the firewall and inspection tools that said corpos use. The DISA STIG requirements even call for disabling QUIC at the browser level.

Yes. My high school used to do this. UDP blocked except for DNS to some specific servers, and probably some other needed things.

This seems like a silly workaround at first but it’s really not. If the network is unreliable, you can’t really use normal video streaming, you need to send full screenshots. Probably still a better idea to use only I-frames than a bunch of JPEGs but whatever.

But they did make some very silly mistakes. Par for the course of an AI coding company I guess.

- WTF are you doing with 40 Mbps. Tone it down to like 8.

- If the network is reliable but slow, just reduce bitrate and resolution. Don’t use JPEGs unless packet loss is the problem.

- WTF are you doing using a whole game streaming server? It’s meant for LAN, with minimal latency. Just capture the screen and encode it, send via WebSockets. Moonlight is completely unnecessary.

- Only keep the latest frame on the server. Don’t try to send them all immediately or it’ll fall behind. Wait for the client to finish receiving before sending another one. That way it won’t fall further and further behind. This should have been extremely obvious.

- H264 is so 2003, ask the client if it supports AV1 or HEVC then use that, more data for free.

- Use WebTransport when available, it’s basically made for live streaming

- Why are you running a screenshot tool in terminal then grabbing the jpg… Unnecessary file overhead & dependency
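The “only keep the latest frame, wait for the client” point could look roughly like this in Python. It’s a minimal sketch: `LatestFrameSender`, `on_frame` and `on_ack` are made-up names, and it assumes the client sends some kind of acknowledgement once it has received a frame:

```python
class LatestFrameSender:
    """Sends one frame at a time and keeps only the newest waiting frame."""

    def __init__(self, send):
        self._send = send        # callable that transmits one frame
        self._pending = None     # newest frame captured while one is in flight
        self._in_flight = False  # True until the client acks the last frame
        self.dropped = 0         # stale frames that were never sent

    def on_frame(self, frame):
        """Called by the capture loop for every new frame."""
        if self._in_flight:
            if self._pending is not None:
                self.dropped += 1  # overwrite the stale frame instead of queuing
            self._pending = frame
        else:
            self._transmit(frame)

    def on_ack(self):
        """Called when the client reports it finished receiving a frame."""
        self._in_flight = False
        if self._pending is not None:
            frame, self._pending = self._pending, None
            self._transmit(frame)

    def _transmit(self, frame):
        self._in_flight = True
        self._send(frame)


sent = []
sender = LatestFrameSender(sent.append)
for n in range(1, 6):  # five frames captured before the client acks anything
    sender.on_frame(n)
sender.on_ack()
print(sent, sender.dropped)  # [1, 5] 3
```

A slow client just sees fewer frames, never older ones, so the delay can’t keep growing.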

I probably missed some but even for an AI company this is really bad

Yeah… I mean they should have just copied whatever video conferencing platforms do because they all work fine behind corporate proxies and they also don’t suffer from this “increasing delay” problem.

I haven’t actually looked into what they do, but presumably it’s something like WebRTC with a fallback to HLS, with closed-loop feedback about the delay.

Though in fairness it doesn’t sound like “watching an AI agent” is the most critical thing, and MJPEG is surprisingly decent.

The video conferencing platform my work uses works well because it’s a large well-known platform and they punched holes for it into the firewall and the vpn. Not really something a service provider can just replicate.

I’m commenting on this because I want to read/discuss it later. I can’t seem to save this post

Edit: Ok now that the Xmas hoopla is over and I have free time, I read this. What even was the point lol
