Ugh. Don’t get me started.

Most people don’t understand that the only thing it does is ‘put words together that usually go together’. It doesn’t know if something is right or wrong, just if it ‘sounds right’.

Now, if you throw in enough data, it’ll kinda sorta make sense with what it writes. But as soon as you try to verify the things it writes, it falls apart.

I once asked it to write a small article with a bit of history about my city and five interesting things to visit. In the history bit, it confused two people with similar names who lived 200 years apart. In the ‘things to visit’, it listed two museums by name that are hundreds of miles away. It invented another museum that does not exist. It also happily told you to visit our Olympic stadium. While we do have a stadium, I can assure you we never hosted the Olympics. I’d remember that, as I’m older than said stadium.

The scary bit is: what it wrote was lovely. If you read it, you’d want to visit for sure. You’d have no clue that it was wholly wrong, because it sounds so confident.

AI has its uses. I’ve used it to rewrite a text that I already had and it does fine with tasks like that. Because you give it the correct info to work with.

Use the tool appropriately and it’s handy. Use it inappropriately and it’s a fucking menace to society.

I gave it a math problem to illustrate this and it got it wrong.

If it can’t do that, imagine adding nuance.

Well, math is not really a language problem, so it’s understandable LLMs struggle with it more.

But it means it’s not “thinking” as the public perceives AI.

Hmm, yeah, AI never really did think. I can’t argue with that.

It’s really strange now, if I mentally zoom out a bit, that we have machines that are better at language-based reasoning than logic-based reasoning (like math or coding).

YMMV, I guess. I’ve given it many difficult calculus problems to help me through, and it went well.

I know this is off topic, but every time I see you comment on a thread, all I can see is the Pepsi logo (I use the Sync app, for reference).

You know, just for you: I just changed it to the Coca Cola santa :D

Spreading the holly day spirit

We are all dutch on this blessed day

We are all gekoloniseerd

Voyager doesn’t show user PFPs at all. :/

Wait, when did you do this? I just tried this for my town and researched each aspect to confirm myself. It was all correct. It talked about the natives that once lived here, how the land was taken by Mexico, then granted to some dude in the 1800s. The local attractions were spot on and things I’ve never heard of. I’m…I’m actually shocked and I just learned a bunch of actual history I had no idea of in my town 🤯

I did that test late last year, and repeated it with another town this summer to see if it had improved. Granted, it made fewer mistakes, but still very annoying ones. Like placing a tourist info office at a completely incorrect, non-existent address.

I assume your result also depends a bit on what town you try. I doubt it has really been trained on information pertaining to a city of 160,000 inhabitants in the Netherlands. It should do better with the US, I’d imagine.

The problem is it doesn’t tell you it has knowledge gaps like that. Instead, it chooses to be confidently incorrect.

Only 85k pop here, but yeah. I imagine it’s half YMMV, half straight up luck that the model doesn’t hallucinate shit.

Did you chatgpt this title?

“Did you ChatGPT it?”

I wondered what language this would be an unintended insult in.

Then I chuckled when I ironically realized, it’s offensive in English, lmao.

Did you cat I farted it?

GPT’s natural language processing is extremely helpful for simple questions that have historically been difficult to Google because they aren’t a concise concept.

The type of thing that is easy to ask but hard to create a search query for, like tip-of-my-tongue questions.

Google used to be amazing at this. You could literally search “who dat guy dat paint dem melty clocks” and get the right answer immediately.

I mean tbf you can still search “who DAT guy” and it will give you Salvador Dali in one of those boxes that show up before the search results.

The type of question where you don’t even know what you don’t know.

Last night, we tried to use ChatGPT to identify a book that my wife remembers from her childhood.

It didn’t find the book, but instead gave us a title for a theoretical book that could be written that would match her description.

At least it said if it exists, instead of telling you when it was written (hallucinating)

Maybe it’s trying to motivate me to become a writer.

Same happens every time I’ve tried to use it for search. It will be radioactive for this type of thing until someone figures that out. Quite frustrating. If they spent as much time on telling when a user wants objective information with citations as they do on determining whether the response breaks content guidelines, we might actually have something useful. Instead, we get AI slop.

And then Google to confirm the GPT answer isn’t total nonsense.

I’ve had people tell me “Of course, I’ll verify the info if it’s important”, which implies that if the question isn’t important, they’ll just accept whatever ChatGPT gives them. They don’t care whether the answer is correct or not; they just want an answer.

That is a valid tactic for programming or how-to questions, provided you know not to unthinkingly drink bleach if it says to.

Well yeah. I’m not gonna verify how many butts it takes to swarm Mount Everest, because that’s not worth my time. The robot’s answer is close enough to satisfy my curiosity.

For the curious, I got two responses with different calculations and different answers as a result. So it could take anywhere from 1.5 to 7.5 billion butts to swarm Mount Everest. Again, I’m not checking the math because I got the answer I wanted.

Both suck now.

I have to say, look it up online and verify your sources.

How long until ChatGPT starts responding “It’s been generally agreed that the answer to your question is to just ask ChatGPT”?

I’m somewhat surprised that ChatGPT has never replied with “just Google it, bruh!” considering how often that answer appears in its data set.

Sadly, partial truths are an improvement over some sources these days.

Have they? Don’t think I’ve heard that once, and I work with people who use ChatGPT themselves.

I’m with you. Never heard that. Never.

This is entirely Google’s fault.

Google intentionally made search worse, but even if they want to make it better again, there’s very little they can do. The web itself is extremely low signal:noise, and it’s almost impossible to write an algorithm that lets the signal shine through (while also giving any search results back)

It would still be better if quality search (not extracting more and more money in the short term) was their goal.

Just duck it bro. (Add !chat to your query or use ai assistant in results)

ChatGPT is a tool under development and it will definitely improve in the long term. There is no reason to shit on it like that.

Instead, focus on the real problems: AI not being open-source, AI being under the control of a few monopolies, and there being little to no regulation to ensure it develops in a healthy direction.

AI is pretty over-rated but the Anti-AI forces way overblow the problems associated with AI.

> it will definitely improve in the long term.

Citation needed

> There is no reason to shit on it like that.

Right now there is, because of how wrong it and other AIs can be, with the average person taking the first answer as correct without double-checking.

Reject proprietary LLMs, tell people to “just llama it”

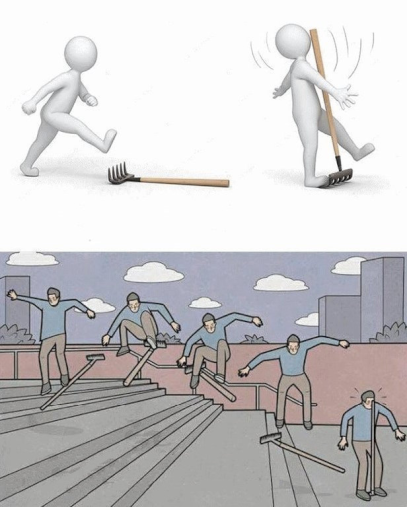

Top is proprietary LLMs vs. bottom self-hosted LLMs. Both end with you getting smacked in the face, but one looks far cooler or smarter to do, while the other one is a streamlined web app that gets you there in one step.

I wonder where people can go. Wikipedia maybe. ChatGPT is better than Google for answering most questions where getting the answer wrong won’t have catastrophic consequences. It is also a good place to get started in researching something. Unfortunately, most people don’t know how to assess the potential problems. Those people will also have trouble if they try googling the answer, as they will choose some biased information source if it’s a controversial topic, usually picking a source that matches their leaning. There aren’t too many great sources of information on the internet anymore; it’s all tainted by partisans or locked behind paywalls. Even if you could get a free source for studies, many are weighted to favor whatever result the researcher wanted. It’s a pretty bleak world out there for good information.

Google isn’t a search engine any more. It stopped being that some years ago.

Now it’s more accurately described as a shitty content feed that can be weakly filtered using key words.