The funny thing is that the YT comment section was almost immediately brigaded by Elmo suckups who complained the tester was using Autopilot and not the latest version of FSD, completely missing the point that the lidar vehicle stopped regardless of whether any automation systems were turned on.

This was a test of the emergency braking systems of both cars in as close to real world scenarios as possible. Whether you run over a child in heavy rain or dense fog should not be dependent on whether the driver remembered to turn certain safety systems on prior to departure.

It’s a massive fail for Tesla, and it is the closest thing to scientific proof that Elmo is a rich daddy’s boy with shit for brains. Once you have that level of money and leverage, you can simply make the most stupid decisions ever and keep falling upwards. Meanwhile real people are dying almost weekly in his vehicles, and reporters and news organisations are scared shitless to report on them because he can cause lots of pain with his imaginary cash. Not to mention no govt agency will investigate properly because he’s got root access to that too. All those deaths are entirely preventable.

Keep burning them, keep protesting at the dealerships. This truly could be the first billionaire we bring down. Watch him activate the OTA kill switch in every Tesla before they go under too. He’s thát kind of baby. Ironically that would save a lot of lives.

Lidar is clearly superior and Musk removed it because it was more expensive. The obvious conclusion is that any self driving car should be made to use lidar and not simple vision based on cameras. Well, at least not solely vision. Musk’s sycophants are just going to keep providing excuses and whataboutisms. But there really is no doubt.

The big headline is understandably that it crashes into a fake painted wall like a cartoon, but that’s not something that most drivers are likely to encounter on the road. The other two comparisons where lidar succeeded and cameras failed were the fog and rain tests, where the Tesla ran over a mannequin that was concealed from standard optical cameras by extreme weather conditions. Human eyes are obviously susceptible to the same conditions, but if the option is there, why not do better than human eyes?

Human eyes are way better at this than any camera based self driving system. No self driving system is anywhere close to driving in Swedish winter with bad weather and no sun, yet us Swedes do it routinely by the millions every day.

Yep, winter will be the death of 100% self driving cars, we can “filter out” snowflakes easily, computers can’t.

My Volvo (and I mean, if one brand makes cars made for winter, it’s them) ends up turning adaptive cruise control off in snowstorms because the sensors get completely blocked by snow. Elon never had to drive in conditions where the whole front of your car ends up looking like a snowbank and it shows. Hell you might need to stop by the side of the road to clear your lights in order to continue driving! Try to make a South African living in Texas understand that!

Not only that. As a general rule road markings are hidden beneath the snow, and often signs will be covered as well. You have to make an educated guess what they say (e.g. the speed limit is guessed from contextual clues like road width, distance to the treeline, etc.).

Really any technology that relies on signs and markings being visible will fail. There is little indication of where the road is. Often a fork in the road is not apparent because the plow has pushed up a snow bank that you must push through (but be careful not to get stuck in it if it’s too dense).

Combine this with heavy snow in the wind, road salt that picks up dirt that sticks to sensors, fog, and other cars that overtake you while spraying snow across your windshield. It’s honestly crazy that humans manage so well in these conditions.

That’s interesting. I’ve never had any problems with adaptive cruise in the winter. I’m pretty sure that my V90CC has a heated radar module.

Mine is a P3 so maybe the newer generation fixed that!

Of course it does snow in Texas. Famously even.

Elon’s problem is he’s just a self-absorbed dick, most people wouldn’t have trouble imagining adverse weather conditions, even if they haven’t personally experienced them.

Average snowfall of 0.1 inches a year in the state, the northern part of the state gets less than 2 inches on average, the place that gets the most snow is Amarillo at 17 inches a year on average.

Last month we got 31 inches over three days over here, I’ve lived in a city where 200 inches a year is normal.

Texas doesn’t know actual winter driving.

Human eyes are much better at picking up shapes from complex visual clutter than computers. Not to mention that human eyes have far better dynamic range than cameras (every time you go into or out of a tunnel the camera is essentially blind for a couple of seconds until it readjusts). Both mean that while you absolutely do need the cameras, you can’t just rely on them and say “oh well humans only use their eyes”, because the cameras and the computers are nowhere near human-level capable.

I love the justification for only using cameras is “humans do it”. Like, are we not supposed to use any technology that humans can’t replicate? Maybe we shouldn’t fly planes because humans can’t fly. It’s such a dumb reason.

Tesla users with low empathy: who cares?

Seriously though, why ditch lidar?

To save Elmo a few bucks.

Long story short is Musk is a greedy billionaire that likes to think he’s an engineer and he clearly isn’t.

Excuse me. He’s also a top ranked PoE player that doesn’t know how to open his inventory.

Whilst I agree on the wall, fog and rain are not extreme weather conditions. I’d rather he’d used a level of rain that was less intense. However, the fact is lidar still worked even though it was not clear it was going to.

Even worse, you could actually easily see the mannequin behind the rain curtain.

They’ve also been killing motorcyclists in Canada, for the same reason.

Thanks for sharing. That was a very informative and succinct video

The worst part about all of this is that Tesla has backed itself into a corner with its aggressive marketing. If additional sensors are required, it will cost them billions to retrofit all the Teslas they’ve already sold. I partially believe that’s why Elon bought Donald.

It’s already going to cost them what I imagine is billions in upgrades.

On the last earnings call Elon finally admitted that HW3 will probably need to be upgraded for people who bought/buy FSD.

That’s millions of cars, and there have been some rulings that the upgrade includes people who decided to subscribe to FSD instead of purchasing it.

Lawsuits over it never being complete aside, the moment their latest tech, whatever it is, can actually do it, even if it is somehow all cameras, millions of cars are going to need upgrades.

However, if they truly solve it, that’s probably a drop in the bucket compared to the profits it’d generate, even if they have to add lidar or refund thousands of dollars per vehicle.

I don’t want him driving my car either

I’d say that the worst part is that Mark Rober said he’ll probably buy another Tesla.

Let that sink in a minute.

You still think that musk’s company will deliver what musk promised. How the fuck is that possible?

No that’s why I said I think it’s why he bought a president…

Just paint a road on the back of every truck trailer.

the crash test in question starts at 15:25 in the yt video embedded in the article. the wall is some kind of styrofoam construction

Pre-cut with comedy edges as well. But the methodology seemed valid as another poster said, giving cars the same limited eyesight as us seems like under engineering for safety.

This is already the top article in the community.

Life imitates art.

As much as I like Mark, he’s got some explaining to do.

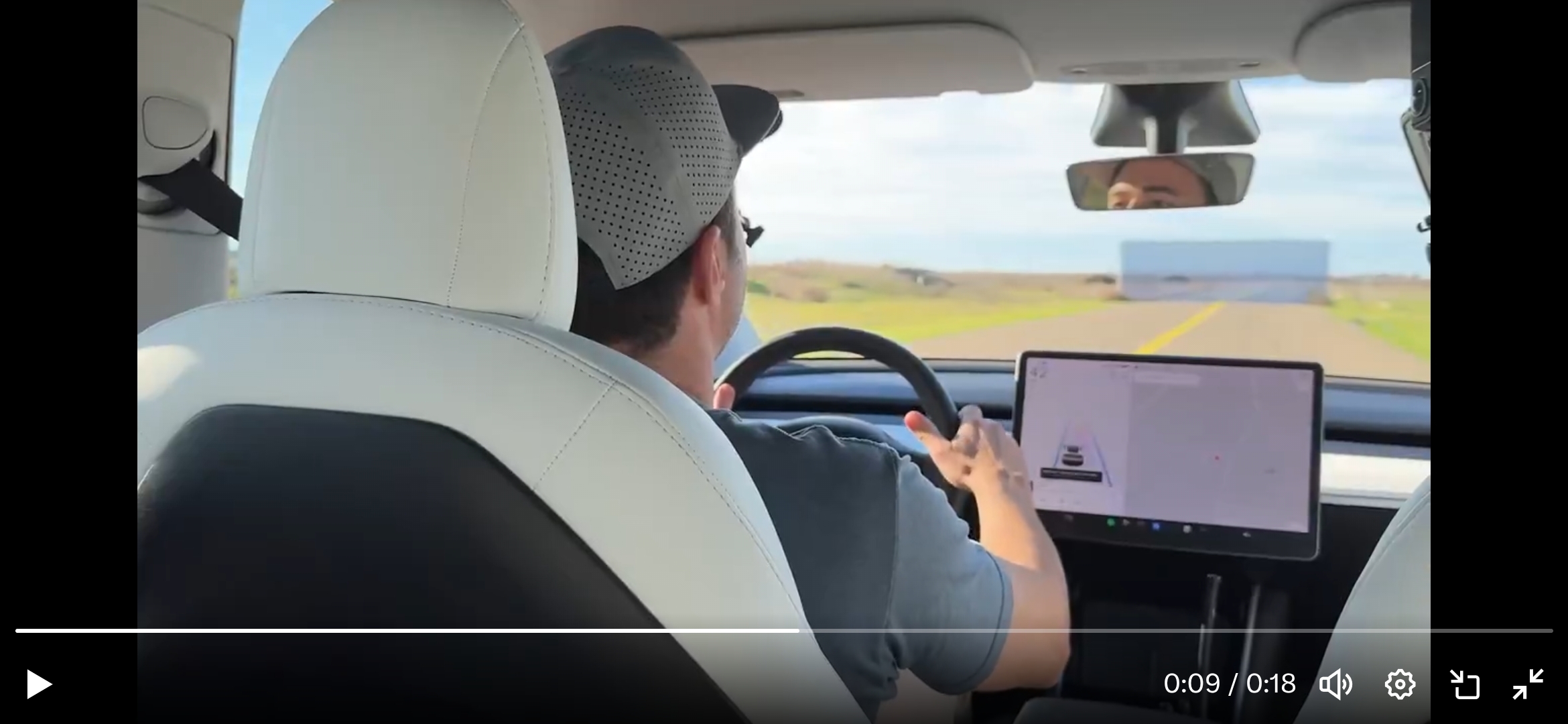

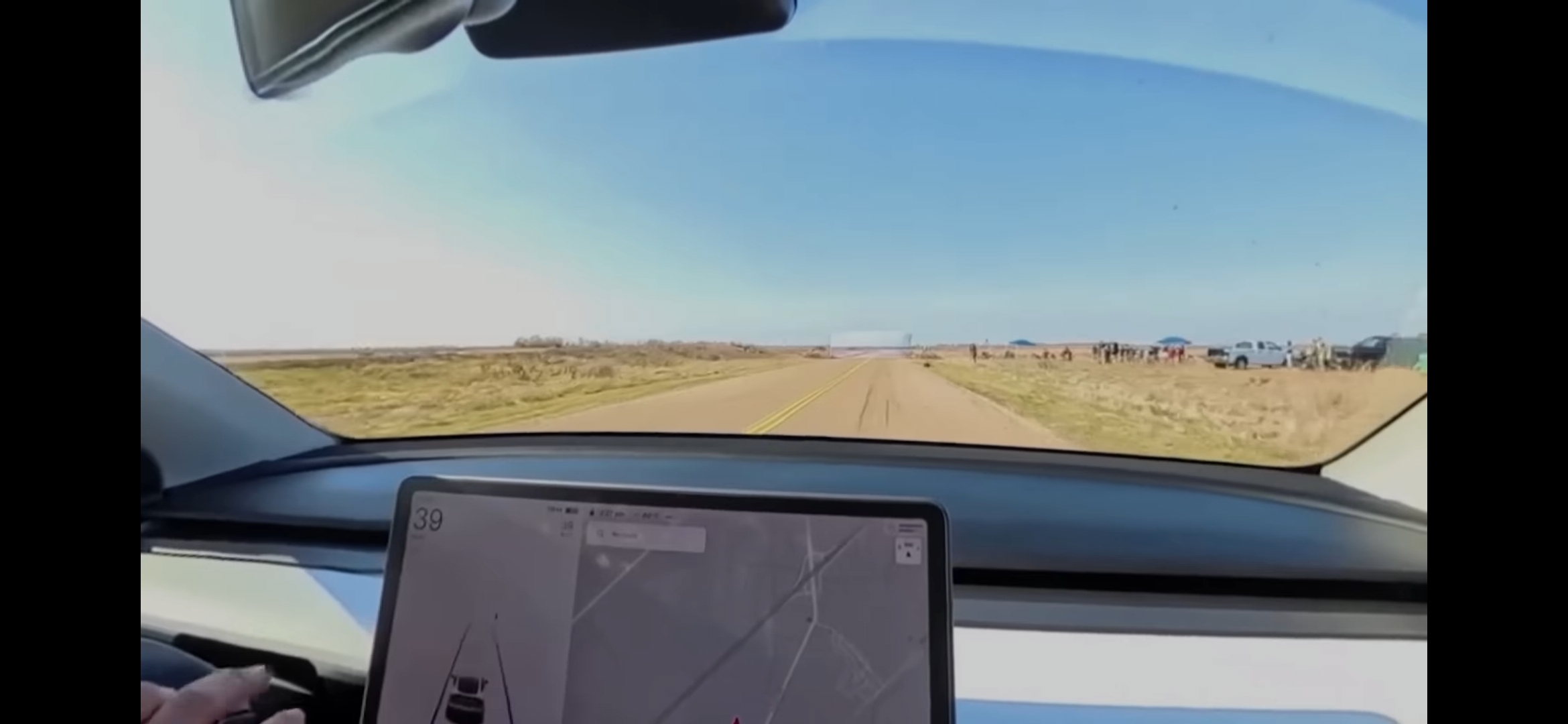

At 15:42 the center console is shown, and Autopilot is disengaged before impact. It was also engaged at 39mph during the YouTube cut, and he struck the wall at 42mph (i.e. the car accelerated into the wall).

Mark then posted the ‘raw footage’ on Twitter. This also shows Autopilot disengage before impact, but shows it was engaged at 42mph. This was a separate take.

/edit;

Youtube, the first frames showing Autopilot being enabled: 39mph

Twitter, the first frames showing autopilot being enabled: 42mph

> Mark then posted the ‘raw footage’ on twitter. This also shows autopilot disengage before impact, but shows it was engaged at 42mph. This was a separate take.

No. That’s by design. The “autopilot” is made to disengage when any likely collision is about to occur to try to reduce the likelihood of someone finding them liable for their system being unsafe.

Not saying you’re wrong (because I’ve always found it suspicious how Tesla always seems to report that autopilot is disengaged for fatal accidents) but there’s probably some people asking themselves “how could it detect the wall to disengage itself?”.

The image on the wall has a perspective baked into it so it will look right from a certain position: a distance from which the lines of the real road match perfectly with the lines of the road on the wall. As you get closer than this distance the illusion will start to break down. The object tracking software will say “There are things moving in ways I can’t predict. Something is wrong here. I give up. Hand control to driver”.

Autopilot disengaged.

(And it only noticed a fraction of a second before hitting it, yet Mark is very conscious of it. He’s screaming.)

Sidenote: the same is true as you move further from the wall than the ideal distance. The illusion will break down in that way too. However, the effect is far more subtle when you’re too far away. After all, the wall is just a tiny bit of your view when you’re a long way away, but it’s your whole view when you’re just about to hit it.

If you watch the footage it’s clearly the same take.

From the twitter footage:

This is from the first couple frames showing that Autopilot is enabled, just as the blue lines appeared on screen: 42mph displayed on the center console.

And another a couple seconds later:

And another a couple seconds later:

And from the youtube footage:

Again, from the first couple frames as Autopilot is enabled, just as the blue lines appear: 39mph displayed on the center console.

Here’s more from youtube, taken several seconds apart:

They are very very similar, but they do appear to be two different takes.

He stated in the video he tapped the brakes. Doesn’t that disengage Autopilot?

It would, but he explicitly says ‘without even a slight tap on the brakes’ in the YouTube video.

Then:

Here is the raw footage of my Tesla going through the wall. Not sure why it disengages 17 frames before hitting the wall but my feet weren’t touching the brake or gas.

- Mark Rober

He did state that he hit the brakes. Just on the fog one, not the wall. 🤷

But the fact that FSD disengages 17 frames before the crash also implies the software, along with the car, crashed 😂 I’d love to see the logs. I imagine the software got real confused real quick.

This is likely what happened. The software hit an invalid state that defaulted to disengaging the autopilot feature. It likely hit an impossible state because, as it got too close, the camera could no longer piece together the “wall” parts of the road with the actual road.

Likely an error condition occurred that would happen in the same way if an object suddenly covered the camera while driving. It would be even more concerning if the autopilot DIDN’T disengage at that point.

The software likely disengaged because the wall was so close it could not function. It would be the same as if an object suddenly covered up the camera while you were driving. It would turn off the autopilot/FSD or whatever they call it. (At least I hope it would)

He explicitly states that he didn’t tap the brakes.

He said he tapped the brakes on one of the earlier non-wall runs.