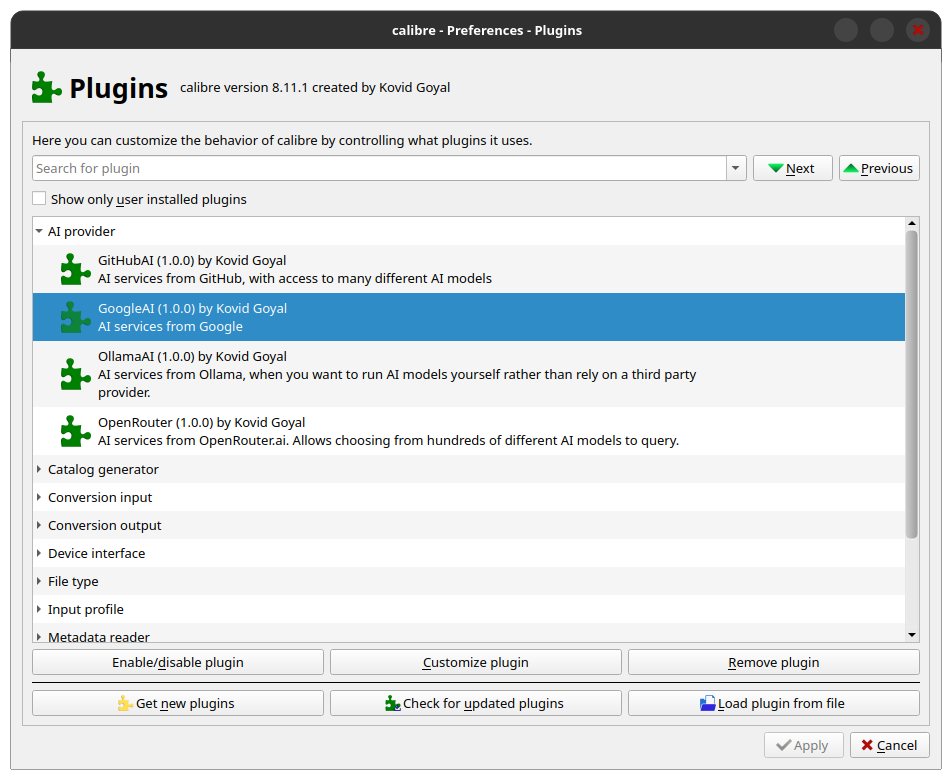

From their release notes:

[…] Note that this feature is completely optional and no AI related code is even loaded until you configure an AI provider.

I’m glad for that part.

Why the fuck would I want that?

My exact reaction to everything corpo llms push.

Usually I’m thinking, “Huh, I actually have a brain, so I don’t need a single thing they’re offering” (which is really nothing that hasn’t been around for 15 years: chatbots).

I don’t really see a use case for it on calibre. Maybe others are more creative than I am and can tell me what they would use it for.

As far as I can see from the release notes, it is completely optional and nothing gets loaded until the provider is defined and activated, so it’s peachy for me.

Well, have you ever read something and gone “what the hell is this?”, “I don’t get this”, or “what is an abubemaneton?” Now you’ll be able to quickly ask AI about it.

I would always prefer a dictionary or Wikipedia over a fucking LLM.

And then the AI can give you some made up and incorrect answer. Hooray!

I remember having an Oxford Dictionary CD as a child (got it with the physical copy).

Unfortunately, it stopped working long ago (and I didn’t rip it), but while it did work, I had quite a lot of fun reading up on word origins, synonyms/antonyms, pronunciations and whatnot. I’d honestly rather be able to connect something like that to calibre (and other programs) via D-Bus than use AI for a definition. And that was just a single CD (I can be sure, because I didn’t have a DVD reader).

So, perhaps some other use case?

QuickDic’s default databases are compiled from Wiktionary entries, and Wiktionary currently seems like the most reliable of the Wikimedia projects. I wonder whether that could be used as well. In QuickDic, having all databases installed takes a bit over 1 GB, which isn’t much by desktop standards, afaik.

AI is capable of more than definitions. In fact, you wouldn’t use it for that. But it’s terribly good at context, so it can interpret a whole phrase or paragraph. Maybe calibre even passes along the book metadata, so it can infer characters, places and broader context.

Yeah, that won’t really be doable just by an extended dictionary.

I myself sometimes use Google to look for stuff like “one word for the phrase …”, and most of the time the AI is the one giving the answer.

Sure, I guess, but I feel I could just as easily ask ChatGPT in the ChatGPT app.

This just makes it faster and more convenient; you don’t have to leave your book.

AI slop has no place in books.

What would it be doing if I enabled it?

And can it be configured to use local(ly installed) AI?

Probably comprehend sections of the text.

Regarding your 2nd question: Yes, you can e.g. use a local Ollama instance.

I thought it would be useful for filling in and finding metadata, but I don’t think it can do that.

Fuck that shit!

I… kinda don’t care about this. It does nothing if not enabled, and it’s super barebones as far as I can tell.

This is why I hate how everything is AI now.

Generative AI is absolute trash.

A fancy souped-up search engine that uses AI to check files and answer questions about them? Whatever.

It’s only being shoved in our faces to try to recoup the trillions they’ve lost, while using up finite resources so a 10-year-old can make an AI image of a puppy.

Fucking hate it here. Going back to 1999.

So if it only lets the LLM see highlighted text, what’s the point of even adding it to calibre? It takes 0 extra seconds to paste that text into Google or ChatGPT or duck.ai or whatever.

You know it doesn’t take 0 seconds. Record yourself. If you are studying or something (and somehow need AI comments), it’s a good feature.

Good to see some actual pushback against the Luddites of Lemmy.

Then get out.