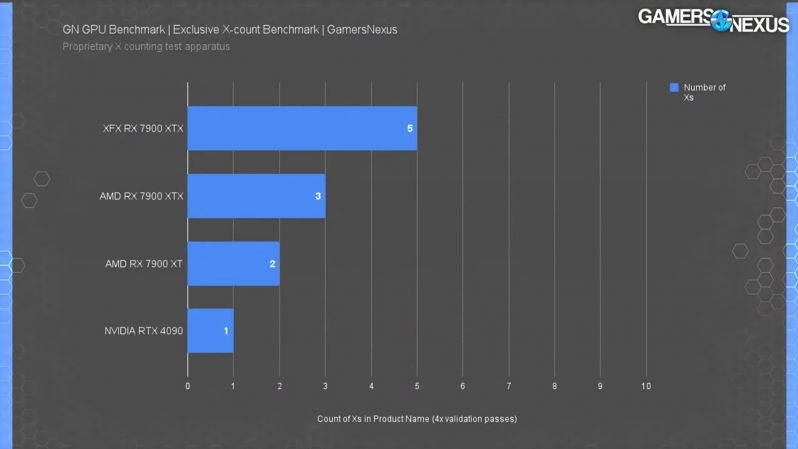

RX 7900 XTX is better because it has more x

The best thing about this is that it’s also on the x-axis.

“Thanks Steve.”

X is deprecated. Use the RWayland 7900 WaylandTWayland instead.

That’s 50% more Xs! You can’t beat this deal!

This bad boy can fit so many X’s in it

Out of all the marketing letters (E, G, I, R, T and X) X is definitely the betterest!

How many X are in XTX?

But what about the xXx_RX_7900_xXx?

xXx_RX_790042069_xXx

But X is bad, as proved by Elon Musk - so it should be the other way around.

That X is twice as much VRAM, which, funnily enough, is great for running AI models.

My question is, is X better than XTX? XTX has more Xs, but X has only Xs. I think I need AI to solve this quandary.

I switched to DuckDuckGo before this bullshit, but this would 100% make me switch if I hadn’t already.

Who wants random ai gibberish to be the first thing they see?

If search engines don’t improve to address the AI problem, most of the Internet will be AI gibberish.

I think that ship has sailed

The internet as we knew it is doomed to be full of ai garbage. It’s a signal to noise ratio issue. It’s also part of the reason the fediverse and smaller moderated interconnected communities are so important: it keeps users more honest by making moderators more common and, if you want to, you can strictly moderate against AI generated content.

DuckDuckGo started showing AI results for me.

I think it uses the Bing engine, IIRC.

Sure, but it’s trivial to turn it off. While you’re there, also turn off ads.

And you can use multiple models, which I find handy.

There is some stuff that AI, or rather LLM search, is useful for, at least for the time being.

Sometimes you need some information that would require clicking through a lot of sources just to find one that has what you need. With DDG, I can ask the question to their four models*, using four different Firefox containers, copy and paste.

See how their answers align, and then identify keywords from their responses that help me craft a precise search query to identify the obscure primary source I need.

This is especially useful when you don’t know the subject that you’re searching about very well.

*ChatGPT, Claude, Llama, and Mixtral are the available models. Relatively recent versions, but you’ll have to check for yourself which ones.
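The "see how their answers align" step can be roughed out in a few lines of Python. This is only a sketch: the function name `shared_keywords`, the stopword list, and the `min_models` threshold are made up for illustration, and the answers are assumed to have been pasted in by hand as plain strings.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption; extend as needed).
STOPWORDS = {"the", "and", "are", "is", "to", "with", "of", "in", "it", "that"}

def shared_keywords(answers, min_models=3):
    """Return words that appear in at least `min_models` of the answers."""
    counts = Counter()
    for text in answers:
        # Count each word once per answer, so the tally is "how many models said it".
        words = set(re.findall(r"[a-z0-9][a-z0-9-]+", text.lower()))
        counts.update(words - STOPWORDS)
    return sorted(word for word, n in counts.items() if n >= min_models)

# Placeholder answers standing in for four pasted model responses.
answers = [
    "The RDNA3 architecture uses chiplets.",
    "AMD built RDNA3 with chiplets and GDDR6.",
    "Chiplets are central to RDNA3.",
    "Navi 31 is the RDNA3 die.",
]
print(shared_keywords(answers))
```

Words that most of the models agree on are decent candidates for the follow-up search query; anything only one model mentions deserves more suspicion.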

Better than an Ad I guess? Not sure if my searches haven’t returned any AI stuff like this or if my brain is already ignoring them like ads.

The plan is to monetize the AI results with ads.

I’m not even sure how that works, but I don’t like it.

Sounds like the advice you’d get in the first three comments asking a question on Reddit.

I wonder where they trained the AI model to answer such a question lol.

Don’t forget the glue on the pizza

It’s not artificial intelligence, it’s artificial idiocy

Nah. It’s real idiocy.

It’s all probability, what’s the most probable idiocy someone would answer?

Stochastic parrot

No, they’re All Interns.

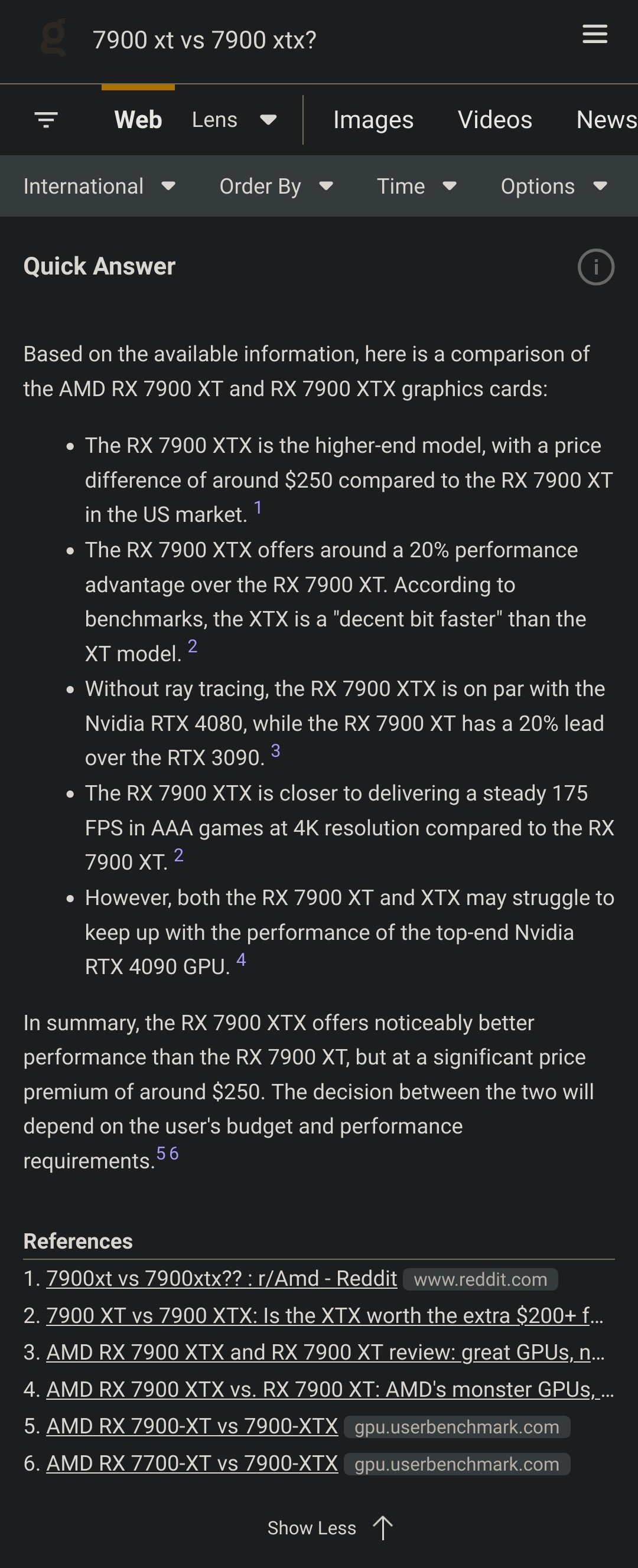

Here is what Kagi delivers with the same prompt:

NB: quick answer is only generated when ending your search with a question mark

Problem is, you cannot trust it’s not hallucinating these stats

And even if it’s showing the correct number, you can’t be sure how trustworthy the source is.

This applies to any information though, it’s got nothing to do with LLMs specifically.

Not really, no. Sources of information gain a reputation as time goes on. So, even though you should still check with multiple sources, you can sort of know if a certain bit of information is likely to be correct or not.

On the other hand, LLMs will quote different sources, and sometimes they will only provide them if you ask. Even then they can hallucinate and quote a source that doesn’t actually exist, so there’s that as well.

At least it’s citing sources and you can check to make sure. And from my anecdotal evidence it has been pretty good so far. It also told me on some occasions that the queried information was not found in its sources instead of just making something up. But it’s not perfect for sure; it’s always better to do manual research, but for a first impression and to find some entry points I’ve found it useful so far.

The problem is that you need to check those sources to make sure it’s not just making up bullshit, and at that point you didn’t gain anything from the genAI.

As I said the links provide some entry points for further research. It’s providing some use to me because I don’t need to check every search result. But to each their own and I understand the general scepticism of generative “AI”

If you don’t check every source, it might just be bullshitting you. There are people who followed your approach and got into hot shit with their bosses and judges.

There is absolutely value in something compiling sources for you to personally review. Anyone who cannot use AI efficiently is analogous to someone who can’t see the utility in a graphing calculator. It’s not magic, it’s a tool. And tools need to be used precisely, and for appropriate purposes.

My plumber fucks up I don’t blame his wrench. My lawyers don’t vet their case work, I blame them.

The sources are the same result of the search? Or at least the top results?

When I query an AI I always end with “provide sources and bibliography for your reply”. That seems to get better replies.

That being said, I can’t trust MKBHD is not hallucinating either.

Well, I’m sated.

7900 XTX; more powerful, therefore better.

7900 XT; cheaper, therefore better.

ChatGPT4o can do some impressive and useful things. Here, I’m just sending it a mediocre photo of a product with no other context; I didn’t type a question. First, it’s identifying the subject, a drink can. Then it’s identifying the language used. Then it’s assuming I want to know about the product, so it’s translating the text without being asked, because it knows I only read English. Then it’s providing background and also explaining what tamarind is and how it tastes. This is enough for me to make a fully informed decision. Google Translate would require me to type the text in, and then would only translate without giving other useful info.

It was delicious.

Google: ok so the AI in search results is a good thing, got it!

The search engine LLMs suck. I’m guessing they use very small models to save compute. ChatGPT 4o and Claude 3.5 are much better.

And good luck typing that in if you don’t know the alphabet it’s written in and can’t copy/paste it.

It goes without saying that this shit doesn’t really understand what it’s outputting; it’s picking words together and parsing a grammatically coherent whole, with barely any regard to semantics (meaning).

It should not be trying to provide you info directly, it should be showing you where to find it. For example, linking this or this*.

To add insult to injury, in this case it isn’t even providing you info, it’s bossing you around. Typical Microsoft “don’t inform a user, tell it [yes, “it”] what it should be doing” mindset. Especially bad in this case because cost vs. benefit varies a fair bit depending on where you are; often there’s no single “right” answer.

*OP, check those two links, they might be useful for you.

LLMs don’t “understand” anything, and it’s unfortunate that we’ve taken to using language related to human thinking to talk about software. It’s all data processing and models.

Yup, 100% this. And there’s a crowd of muppets arguing “ackshyually wut u’re definishun of unrurrstandin/intellijanse?” or “but hyumans do…”, but come on - that’s bullshit, and more often than not sealioning.

Don’t get me wrong - model-based data processing is still useful in quite a few situations. But they’re only a fraction of what big tech pretends that LLMs are useful for.

Yeah, I’m far from anti-AI, but we’re just not anywhere close to where people think we are with it. And I’m pretty sick of corporate leadership saying “We need to make more use of AI” without knowing the difference between an LLM and a machine learning application, or having any idea *how* their company could make use of one of the technologies.

It really feels like one of those hammer in search of a nail things.

SearXNG only returns results search engines agree on. That removes ads and this bullshit

Am I guessing right that the XTX uses 50% more energy for the 20% more power?

That’s generally how it goes at the very top of capacity yes.
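For what it's worth, the arithmetic behind that guess is easy to check. A quick sketch, taking the 50% and 20% figures purely as the numbers guessed above (not measured values) and using a hypothetical helper name:

```python
def relative_efficiency(perf_gain, power_gain):
    """Performance per watt of the faster card relative to the slower one.

    Both arguments are fractional increases, e.g. 0.20 for "20% more".
    """
    return (1 + perf_gain) / (1 + power_gain)

# Guessed figures from the thread: +20% performance for +50% energy.
ratio = relative_efficiency(0.20, 0.50)
print(f"{ratio:.2f}x the performance per watt")
```

With those inputs the faster card would deliver about 0.8 times the performance per watt, i.e. roughly 20% worse efficiency, which fits the "that's how it goes at the very top" reply.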

In one word: factory-overclocked

I’m sorry but we’re going to have to send that to the English teachers to see if it’s really one word…

(on mobile, so sorry for any formatting weirdness)

English teachers will only give you an arbitrary, subjective answer about whether it’s a word - you want a linguist if you want an objective answer.

Since we’re dealing with two different “words” (roots) here, factory and overclocked, the first thing to look for is compound stress. Many compound words in English get initial stress: compare “blackbird” and “a black bird”.

This isn’t foolproof, however. For some speakers there are compounds that don’t get compound stress - some speakers say “PAPER towel” as expected, while others say “paper TOWEL”, but it’s still a compound either way.

So how can we actually tell that paper towel is one word? See if the first member of the potential compound (the non-head) can be modified in any way.

For example, we know doghouse is a compound because in “a big doghouse” big can only refer to the house, and cannot refer to “the house of a big dog”. Similarly, blackboard must be one word because it can take what appear to be contradictory modifiers: “a green blackboard”.

So, in the same way, paper towel and toilet paper are one word because “big paper towel” can’t mean “a towel made from big paper” and “pink toilet paper” can’t mean “paper for a pink toilet”. (Toilet paper also gets compound stress.)

Yet another way to test is by semantic drift (meaning shift). As mentioned earlier, blackboards don’t have to be black, so the meaning of the compound doesn’t perfectly correspond to the pieces of the word - instead, the fact that it’s a vertical board you write on in chalk is much more important to the meaning. This is because once the pieces combine to form a new word, that new word can start to shift away from the meaning of the pieces. Again, however, this process takes time, so it’s not a perfect test.

So, back to the original question: is “factory-overclocked” one word?

Well, it doesn’t get compound stress, and for me I can still say things like “it’s home-factory-overclocked” to mean that it was overclocked in its home factory, so the first member can take modifiers. And, the whole thing still means what the pieces mean.

So, in my grammar, “factory-overclocked” is two words. But for some of you “home factory overclocked” may not be possible, which would indicate that it’s started to become one word for you. Everyone’s grammar is different, but we can still test for these categories.

If you instead mean by your question, “can factory and overclocked be combined with a hyphen?”, however, I can’t help you, because language-specific writing conventions are subjective and arbitrary, and not something that linguists usually care very much about.

And this is why I love places like Lemmy. An offhand joke turns into an actual grammar lesson. Thank you!

After VAR the ruling has been overturned.

You can do this with practically any versus question and get hilarious results

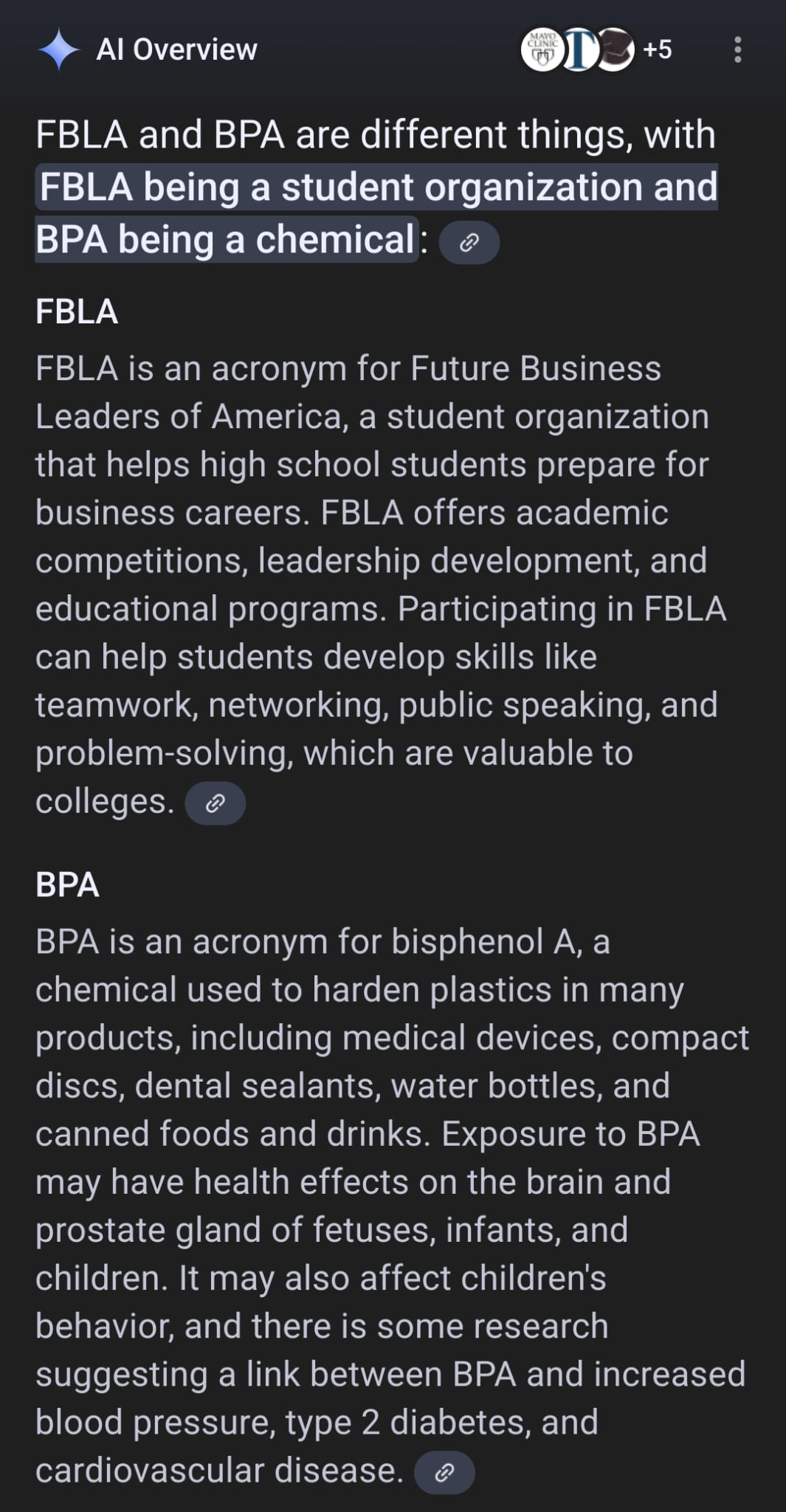

This doesn’t appear to be comparing them, though? Just explaining what two acronyms are?

Yeah I should have mentioned the context is FBLA, and Google partially fixed the prompt.

Original from a few weeks ago:

BPA is another student org called Business Professionals of America

The AI ignores the subject context and just compares whatever is the most common acronym.

They lazy patched it by making the model do a subject check on the result, but not on the prompt so it still comes back with the chemical lol.

Hello, fellow humans. I too am human, just like you! I have skin, and blood, and guts inside me, which is not at all disgusting. Just another day of human!

Won’t you share a delicious cup of ~~motor oil~~ lemonade with me? It’s nice and refrigerated, so it will cool down our bodies without the use of cooling fans!

However we too can use cooling fans. They will just be placed on the ceiling, or in a box, or self standing, and oscillating. Not at all inside our bodies, connected to a board controlled by our CPUs that we clearly don’t have!

Now come, let us take our colored paper with numbers and pictures of previous human rulers and exchange them for human food prepared by not fully adult humans who haven’t matured to the age where their brains develop the ability to care about food sanitation. Then we shall complain that our meal cost too many paper dollars, while receiving less and less potato stick products every year. Ignoring completely the risk of heart disease by indulging in the amounts of food we desire to acquire.

Finally we shall retreat to our place of residence, and complain on the internet that our elected leaders are performing poorly. Rather than ~~terminate the program~~ vote the poorly performing humans out, we shall instead complain that it is other humans’ fault for voting them in. Making no attempt to change our broken system that has been broken our entire existence, with no signs of improving. Instead every 4 years we will make an effort to write down names of people we’ve already complained about in the hopes that enough people write down the same names, and that will fix the problem.

Oh. Shall I request amazon.com to purchase more fans and cooling units? The news being reported that temperatures will soon reach 130F on a regular basis, and all humans will slowly perish.

Shall I share photographs of the new CEO of Starbucks whose daily commute involves a personal jet aircraft, which surely isn’t compounding the problem at all?

First of all, yes

Second option is no

When presented with yes / no pick no, no is the clear yes

That’s a good summary. Google Gemini is no better. Type in a question and it starts off great but then devolves into other brands, other steps to do something that isn’t related to the thing you asked. It’s just terrible and someone will sue them over it next year. Just wait.